42 soft labels machine learning

Data Labeling Software: Best Tools for Data Labeling - Neptune In machine learning and AI development, data labeling is essential: you need a structured set of training data that an ML system can learn from. Creating accurately labeled datasets takes a lot of effort, so data labeling tools come in very handy because they can automate the labeling process, which […]

Learning classification models with soft-label information Materials and methods: Two types of methods that can learn improved binary classification models from soft labels are proposed. The first relies on probabilistic/numeric labels, the other on ordinal categorical labels. We study and demonstrate the benefits of these methods for learning an alerting model for heparin-induced thrombocytopenia.

python - scikit-learn classification on soft labels - Stack Overflow Generally speaking, the form of the labels ("hard" or "soft") is determined by the algorithm chosen for prediction and by the target data on hand. If your data has "hard" labels and you want a "soft" label output from your model (which can be thresholded to give a "hard" label), then yes, logistic regression is in this category.
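To make the Stack Overflow answer concrete, here is a minimal pure-Python sketch of one-feature logistic regression trained by batch gradient descent on cross-entropy. It is an illustration, not scikit-learn's implementation. Because the gradient of binary cross-entropy with respect to the logit is simply (q - p), the same loop accepts hard targets (0/1) or soft targets (any p in [0, 1]), and its output is a soft probability that can be thresholded to give a hard label. The function names, the learning rate, the epoch count, and the 0.5 threshold are all assumptions chosen for illustration.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function mapping a real-valued score to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, targets, lr=0.5, epochs=2000):
    """Fit p(y=1|x) = sigmoid(w*x + b) by batch gradient descent (hypothetical helper).

    `targets` may be hard (0 or 1) or soft (probabilities in [0, 1]):
    the cross-entropy gradient w.r.t. the logit is (q - p) in both cases.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, p in zip(xs, targets):
            q = sigmoid(w * x + b)  # current predicted probability
            grad_w += (q - p) * x
            grad_b += (q - p)
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

def predict(w, b, x, threshold=0.5):
    """Return (soft probability, hard label) for input x; threshold is an assumption."""
    q = sigmoid(w * x + b)
    return q, int(q >= threshold)

# Illustrative soft targets, e.g. an annotator's confidence that each example is positive.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
soft_targets = [0.05, 0.2, 0.5, 0.8, 0.95]
w, b = fit_logistic(xs, soft_targets)
print(predict(w, b, 1.5))  # (soft probability, thresholded hard label)
```

Passing hard 0/1 targets to the same `fit_logistic` gives ordinary logistic regression; the soft output and the threshold step are unchanged.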


Guide to multi-class multi-label classification with neural networks in ... Often in machine learning tasks, a single sample can have multiple possible labels that are not mutually exclusive. This is called a multi-class, multi-label classification problem. Obvious examples are image classification and text classification, where a document can have multiple topics. Both of these tasks are well tackled by neural networks.

Learning of Classification Models from Noisy Soft-Labels by Y Xue · 2016 · Cited by 3 — ... the problem: learning with soft label information [7, 8], in which ...

An introduction to MultiLabel classification - GeeksforGeeks To evaluate the model, we use the metrics module from sklearn, which takes the model's predictions on the test data and compares them with the true labels:

```python
from sklearn.metrics import accuracy_score, hamming_loss

# mlknn_classifier, X_test_tfidf and y_test are assumed to be defined earlier in the
# tutorial: a fitted multi-label (ML-kNN) classifier, the TF-IDF test features,
# and the true label matrix for the test set.
predicted = mlknn_classifier.predict(X_test_tfidf)
print(accuracy_score(y_test, predicted))  # subset accuracy for multi-label targets
print(hamming_loss(y_test, predicted))    # fraction of individual labels predicted wrongly
```
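For multi-label targets, scikit-learn's `accuracy_score` computes subset accuracy (a sample counts as correct only if every one of its labels matches), while `hamming_loss` is the fraction of individual label slots that are predicted wrongly. The dependency-free sketch below reproduces both ideas, assuming labels are given as equal-length 0/1 lists; the helper names `subset_accuracy` and `hamming_loss_simple` are hypothetical.

```python
def subset_accuracy(y_true, y_pred):
    """Fraction of samples whose full label vector is predicted exactly."""
    matches = sum(1 for t, p in zip(y_true, y_pred) if list(t) == list(p))
    return matches / len(y_true)

def hamming_loss_simple(y_true, y_pred):
    """Fraction of individual (sample, label) slots predicted incorrectly."""
    wrong = total = 0
    for t, p in zip(y_true, y_pred):
        for a, b in zip(t, p):
            wrong += int(a != b)
            total += 1
    return wrong / total

# Three documents, four possible topics each (1 = topic present).
y_true = [[1, 0, 1, 0], [0, 1, 0, 0], [1, 1, 0, 1]]
y_pred = [[1, 0, 1, 0], [0, 1, 1, 0], [1, 0, 0, 1]]
print(subset_accuracy(y_true, y_pred))      # 0.333... (only the first row matches exactly)
print(hamming_loss_simple(y_true, y_pred))  # 0.1666... (2 wrong slots out of 12)
```

The contrast explains why the two scores can look so different: one wrong topic makes a whole document count as a miss for subset accuracy, but costs only one slot in the Hamming loss.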

What is the difference between soft and hard labels? - r/learnmachinelearning Hard label = binary (one-hot) encoded, e.g. [0, 0, 1, 0]. Soft label = probability encoded, e.g. [0.1, 0.2, 0.5, 0.2]. Soft labels have the potential to tell a model more about the meaning of each sample.

Multi-Class Neural Networks: Softmax | Machine Learning - Google Developers Recall that logistic regression produces a decimal between 0 and 1.0. For example, a logistic regression output of 0.8 from an email classifier suggests an 80% chance of an email being spam and a 20% chance of it being not spam. Clearly, the sum of the probabilities of an email ...

Label Smoothing — Make your model less (over)confident 3 Jun 2021 — When we talk about overconfidence in machine learning, we are mainly talking about hard labels. Soft label: A soft label is a score which has ...

PDF Empirical Comparison of "Hard" and "Soft" Label Propagation for ... ... (SP), propagates soft labels such as class membership scores or probabilities. To illustrate the difference between these approaches, assume that we want to find fraudulent ... a separate classification problem for each CoRA sub-topic in the Machine Learning category. Despite certain differences between our results for CoRA and synthetic data, ...
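The soft propagation idea, in which class membership scores rather than hard labels flow through a graph, can be sketched in a few lines: labeled nodes keep fixed class distributions, and every unlabeled node repeatedly takes the average of its neighbors' distributions until the values settle. This is a generic iterative-averaging sketch under assumed inputs (an adjacency list and one-hot seed distributions), not the exact procedure evaluated in the cited paper; `propagate_soft` and the iteration count are hypothetical choices.

```python
def propagate_soft(neighbors, seeds, n_classes, iterations=500):
    """Iterative soft label propagation on an undirected graph (hypothetical helper).

    neighbors: dict node -> list of adjacent nodes
    seeds: dict node -> fixed class distribution for labeled nodes
    Returns dict node -> class-membership distribution (a soft label).
    """
    uniform = [1.0 / n_classes] * n_classes
    # Labeled nodes start at their seed distribution, unlabeled ones at uniform.
    labels = {node: list(seeds.get(node, uniform)) for node in neighbors}
    for _ in range(iterations):
        updated = {}
        for node, adj in neighbors.items():
            if node in seeds or not adj:
                # Labeled nodes are clamped; isolated nodes have nothing to average.
                updated[node] = labels[node]
                continue
            # Each class score becomes the mean of the neighbors' scores.
            updated[node] = [
                sum(labels[m][k] for m in adj) / len(adj) for k in range(n_classes)
            ]
        labels = updated
    return labels

# A path graph 0-1-2-3-4: node 0 is labeled class 0, node 4 is labeled class 1.
graph = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
result = propagate_soft(graph, seeds={0: [1.0, 0.0], 4: [0.0, 1.0]}, n_classes=2)
for node in range(5):
    print(node, [round(v, 3) for v in result[node]])
```

On this path graph the unlabeled nodes settle into graded scores (node 2 ends near [0.5, 0.5]), which is exactly the information a hard propagation scheme would discard by assigning each node a single class.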

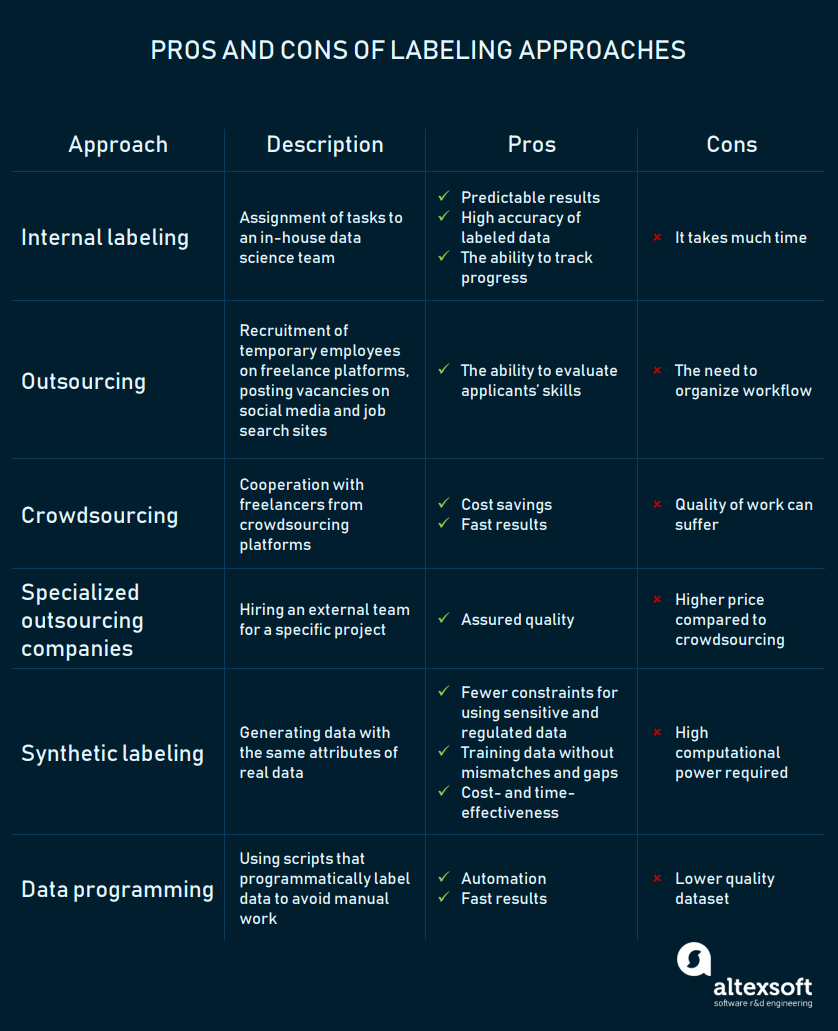

How to Label Data for Machine Learning: Process and Tools - AltexSoft Data labeling (or data annotation) is the process of adding target attributes to training data and labeling them so that a machine learning model can learn what predictions it is expected to make. This process is one of the stages in preparing data for supervised machine learning.

Semi-Supervised Learning With Label Propagation - Machine Learning Mastery Nodes in the graph then have soft labels, or label distributions, based on the labels or label distributions of examples connected nearby in the graph. Many semi-supervised learning algorithms rely on the geometry of the data induced by both labeled and unlabeled examples to improve on supervised methods that use only the labeled data.

Label Smoothing - Lei Mao's Log Book In machine learning or deep learning, we commonly use regularization techniques such as L1, L2, and dropout to prevent our model from overfitting. ... Label smoothing is a regularization technique for classification problems that prevents the model from predicting the labels too confidently during training and generalizing poorly.

Is it okay to use cross entropy loss function with soft labels? The sum is taken over the set of possible class labels. In the case of 'soft' labels like you mention, the labels are no longer class identities themselves, but probabilities over two possible classes. Because of this, you can't use the standard expression for the log loss, which assumes a single true class. But the concept of cross entropy still applies: the log-probability of each class is weighted by that class's target probability.
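Concretely, the general cross-entropy between a target distribution p and a predicted distribution q is H(p, q) = -Σ_k p_k log q_k. With a one-hot p it collapses to the usual log loss, -log q_true; with a soft p, every class contributes in proportion to its target probability. The sketch below is a minimal illustration; the `eps` clip is an assumed safeguard against log(0), and the example distributions are made up.

```python
import math

def cross_entropy(target, predicted, eps=1e-12):
    """H(p, q) = -sum_k p_k * log(q_k) for a single example.

    Works unchanged for hard (one-hot) and soft (probability) targets.
    """
    return -sum(p * math.log(max(q, eps)) for p, q in zip(target, predicted))

predicted = [0.7, 0.2, 0.1]
hard = [1.0, 0.0, 0.0]
soft = [0.8, 0.15, 0.05]
print(cross_entropy(hard, predicted))  # equals -log(0.7), the standard log loss
print(cross_entropy(soft, predicted))  # every class term now contributes
```

For a fixed soft target, the loss is smallest when the prediction equals that target (Gibbs' inequality), so minimizing it still drives the model toward the soft label.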

Label smoothing with Keras, TensorFlow, and Deep Learning This type of label assignment is called soft label assignment. Unlike hard label assignments, where class labels are binary (i.e., positive for one class and negative for all other classes), soft label assignment allows the positive class to have the largest probability while all other classes have a very small probability.

How to Label Data for Machine Learning in Python - ActiveState To create a labeling project, run the following command:

```shell
label-studio init .
```

Once the project has been created, you will receive a message stating:

```
Label Studio has been successfully initialized. Check project states in .\
Start the server: label-studio start .\
```

Labelling Images - 15 Best Annotation Tools in 2022 - Folio3AI Blog This information helps them find out how the images can be used to their advantage, and then they can plan and perform further tasks, like content moderation or automatic metadata generation. 5 Free Image Annotation Tools/Software: Plainsight, Markup Hero, Label Studio, LabelD, SuperAnnotate.

ARIMA for Classification with Soft Labels | by Marco Cerliani | Towards ... We have soft targets/labels p ∈ (0, 1) (make sure to clip the targets to [eps, 1 - eps] to avoid instability issues when we take logs). Then fit a regression model. Finally, to do inference, we take the sigmoid of the predictions from the regression model.
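Taking the sigmoid of a regression model's predictions only yields sensible probabilities if the model was fit in logit space, so the recipe above presumably regresses on logit(p) = log(p / (1 - p)) of the clipped targets; that is also where the logs, and hence the clipping, come in. The sketch below follows that reading. It assumes a simple least-squares line on one feature as a stand-in for ARIMA (so it is not the article's model) and an illustrative `eps` of 1e-3; `fit_line` is a hypothetical helper.

```python
import math

def logit(p: float) -> float:
    """Log-odds of a probability in (0, 1)."""
    return math.log(p / (1.0 - p))

def sigmoid(z: float) -> float:
    """Inverse of logit: maps any real number back into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b (stand-in for any regressor, e.g. ARIMA)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, my - a * mx

eps = 1e-3                               # assumed clipping margin
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
soft = [0.0, 0.1, 0.5, 0.9, 1.0]         # soft targets, including the extremes

clipped = [min(max(p, eps), 1.0 - eps) for p in soft]  # keep the logs finite
a, b = fit_line(xs, [logit(p) for p in clipped])       # regress in logit space
prob = sigmoid(a * 2.5 + b)                            # map the prediction back to a probability
print(prob)
```

Without the clip, the targets 0.0 and 1.0 would produce log(0) and a division by zero inside `logit`, which is the instability the article warns about.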


Efficient Learning of Classification Models from Soft-label Information ... soft-label further refining its class label. One caveat of applying this idea is that soft labels based on human assessment are often noisy. To address this problem, we develop and test a new classification model learning algorithm that relies on soft-label binning to limit the effect of soft-label noise. We ...
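In its simplest form, binning replaces each noisy soft label with the index of the interval it falls into, so small disagreements between assessors (say 0.62 versus 0.68) map to the same ordinal category. The sketch below shows only that discretization step, with assumed evenly spaced bin edges; it is not the paper's learning algorithm, and `bin_soft_labels` is a hypothetical helper.

```python
from bisect import bisect_right

def bin_soft_labels(soft_labels, edges=(0.2, 0.4, 0.6, 0.8)):
    """Map each soft label in [0, 1] to an ordinal bin index 0..len(edges).

    The default edges are an illustrative assumption, not values from the paper.
    """
    return [bisect_right(edges, p) for p in soft_labels]

print(bin_soft_labels([0.05, 0.62, 0.68, 0.95]))  # [0, 3, 3, 4]
```

Coarser bins absorb more assessor noise but also discard more of the graded information that made the soft labels useful, so the number of bins is a trade-off.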

Labeling images and text documents - Azure Machine Learning

1. Sign in to Azure Machine Learning studio.
2. Select the subscription and the workspace that contains the labeling project. Get this information from your project administrator.
3. Depending on your access level, you may see multiple sections on the left. If so, select Data labeling on the left-hand side to find the project.

Regression - Features and Labels - Python Programming You have a few choices here regarding how to handle missing data. You can't just pass a NaN (Not a Number) data point to a machine learning classifier; you have to handle it first. One popular option is to replace missing data with -99,999. With many machine learning classifiers, this value will simply be recognized and treated as an outlier feature.
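The replace-with-a-sentinel option can be sketched without any library. Here, `None` or NaN entries in a plain list of feature rows are swapped for -99,999. The helper name `fill_missing` is hypothetical; in a pandas workflow the same idea is usually a single `DataFrame.fillna` call.

```python
import math

SENTINEL = -99999.0  # the outlier value suggested in the tutorial

def fill_missing(rows, sentinel=SENTINEL):
    """Replace None/NaN feature values with a sentinel outlier value (hypothetical helper)."""
    def is_missing(v):
        return v is None or (isinstance(v, float) and math.isnan(v))
    return [[sentinel if is_missing(v) else v for v in row] for row in rows]

rows = [[1.5, float("nan"), 3.0], [None, 2.0, 4.0]]
print(fill_missing(rows))  # [[1.5, -99999.0, 3.0], [-99999.0, 2.0, 4.0]]
```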

The Ultimate Guide to Data Labeling for Machine Learning - CloudFactory In machine learning, if you have labeled data, that means your data is marked up, or annotated, to show the target, which is the answer you want your machine learning model to predict. In general, data labeling can refer to tasks that include data tagging, annotation, classification, moderation, transcription, or processing.

Learning Soft Labels via Meta Learning - Apple Machine Learning Research The learned labels continuously adapt themselves to the model's state, thereby providing dynamic regularization. When applied to the task of supervised image-classification, our method leads to consistent gains across different datasets and architectures. For instance, dynamically learned labels improve ResNet18 by 2.1% on CIFAR100.

Understanding Deep Learning on Controlled Noisy Labels - Google AI Blog In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise ...

Learning classification models with soft-label information - PMC by Q Nguyen · 2014 · Cited by 66 — A new classification learning framework that lets us learn from auxiliary soft-label information provided by a human expert is a promising new ...

Pseudo Labelling - A Guide To Semi-Supervised Learning There are three kinds of machine learning approaches: supervised, unsupervised, and reinforcement learning. Supervised learning, as we know, is where both data and labels are present. Unsupervised learning is where only data and no labels are present. Reinforcement learning is where agents learn which actions to take from the rewards those actions generate.

What is the definition of "soft label" and "hard label"? A soft label is one which has a score (probability or likelihood) attached to it. So the element is a member of the class in question with a probability/likelihood score of, e.g., 0.7; this implies that an element can be a member of multiple classes (presumably with different membership scores), which is usually not possible with hard labels.
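One common source of such scores is a softmax layer, which turns a model's raw class scores (logits) into a probability vector, that is, a soft label. Taking the argmax and one-hot encoding it recovers the corresponding hard label and discards the membership scores. The logit values below are made up for illustration, and the helper names are hypothetical.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def to_hard(soft):
    """One-hot encode the most probable class (the corresponding hard label)."""
    best = max(range(len(soft)), key=soft.__getitem__)
    return [1 if i == best else 0 for i in range(len(soft))]

soft = softmax([0.5, 1.2, 2.0, 0.1])
print([round(p, 3) for p in soft])  # soft label: a score for every class
print(to_hard(soft))                # [0, 0, 1, 0]
```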

Learning classification models with soft-label information 5 Jul 2022 — ... The soft label methods are typically for binary classification [25], where the human annotators not only assign a label for an example but ...

PDF Efficient Learning with Soft Label Information and Multiple Annotators Note that our learning from auxiliary soft labels approach is complementary to active learning: while the latter aims to select the most informative examples, we aim to gain more useful information from those selected. This gives us an opportunity to combine these two approaches.

[2009.09496] Learning Soft Labels via Meta Learning - arXiv.org Learning Soft Labels via Meta Learning Nidhi Vyas, Shreyas Saxena, Thomas Voice One-hot labels do not represent soft decision boundaries among concepts, and hence, models trained on them are prone to overfitting. Using soft labels as targets provides regularization, but different soft labels might be optimal at different stages of optimization.

A semi-supervised learning approach for soft labeled data by MM El-Zahhar · Cited by 7 — Abstract: In some machine learning applications using soft labels is more useful and informative than crisp labels. Soft labels indicate the degree of ...

machine learning - What are soft classes? - Cross Validated You can't do that with hard classes, other than by creating two training instances with two different labels: `x -> [1, 0, 0, 0, 0]` and `x -> [0, 0, 1, 0, 0]`. As a result, the weights will probably bounce back and forth, because the two examples push them in different directions. That's when soft classes can be helpful.
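The benefit of a soft class can be checked with a little arithmetic. For a softmax output q trained with cross-entropy, the gradient with respect to the logits is q - y. The two conflicting hard examples therefore push class 0 in opposite directions, while the average of their gradients is exactly the gradient of a single soft target [0.5, 0, 0.5, 0, 0], which points one consistent way. The sketch uses made-up logits, and the helper names are hypothetical.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def grad_wrt_logits(logits, target):
    """Gradient of cross-entropy(target, softmax(logits)) w.r.t. the logits: q - y."""
    q = softmax(logits)
    return [qi - yi for qi, yi in zip(q, target)]

logits = [0.3, -0.1, 0.2, 0.0, -0.4]  # illustrative current model output for input x
hard_a = [1, 0, 0, 0, 0]
hard_b = [0, 0, 1, 0, 0]
soft = [0.5, 0, 0.5, 0, 0]

g_a = grad_wrt_logits(logits, hard_a)
g_b = grad_wrt_logits(logits, hard_b)
g_soft = grad_wrt_logits(logits, soft)
averaged = [(a + b) / 2 for a, b in zip(g_a, g_b)]
print(g_a[0], g_b[0])  # opposite signs: the two hard examples pull class 0 in different directions
print(all(abs(x - y) < 1e-12 for x, y in zip(averaged, g_soft)))  # True
```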

Creating targets for machine learning labels - Python Programming Hello and welcome to part 10 (and 11) of the Python for Finance tutorial series. In the previous tutorial, we began to build our labels for our attempt at using machine learning for investing with Python. In this tutorial, we're going to use what we did last tutorial to actually generate our labels when we're ready. Now we're going to create ...

Label Smoothing: An ingredient of higher model accuracy These are soft labels, rather than hard labels of exactly 0 and 1. This ultimately gives a lower loss when a prediction is incorrect, so the model is penalized, and updated, slightly less harshly for it.
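The smoothed targets are commonly computed as y_smooth = (1 - α) · y_onehot + α / K for K classes, where α is a small constant. The sketch below implements that formula; the default α = 0.1 is an assumption for illustration, not a value taken from the quoted post.

```python
def smooth_labels(one_hot, alpha=0.1):
    """Label smoothing: move a fraction alpha of the probability mass uniformly onto all K classes."""
    k = len(one_hot)
    return [(1.0 - alpha) * y + alpha / k for y in one_hot]

print([round(v, 3) for v in smooth_labels([0, 0, 1, 0])])  # [0.025, 0.025, 0.925, 0.025]
```

The positive class keeps the largest probability and the others get a small, equal share, which matches the soft label assignment described in the Keras/TensorFlow snippet. The result is still a valid distribution, so it can be used directly with the general cross-entropy.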


